All learning occurs within constraints: some constraints are codified within formal educational systems; others are often unarticulated, emerge in personal life, or are codified in aspects of life unrelated to education (e.g. economics or politics). Whilst informal learning falls into this latter category, the constraints surrounding formal and informal learning are nevertheless entwined. For example, the formal education system characterises the learning of students as constrained by its conventional regulatory mechanisms (classrooms, curriculum, timetable, assessments, etc.), whilst the learning of teachers and managers, together with much of the learning of the students within the system, is subject to constraints which extend into society at large. What are the constraints of learning beyond the institution? How can we investigate them? What are their dynamics? What measures might governments take to manipulate them to improve society?

My research approach to informal learning has focused on identifying and understanding the dynamics of the various constraints within which learning occurs. This has been a practical, theoretical, technological and pedagogical project, involving experiments in teaching and learning as much as theory-building. Informal learning raises issues which challenge the separation between teaching and research and, more fundamentally, issues of social equity, status, polity and the nature of the relationship between education and society. Since universities are tied up in mechanisms of status and equality, my research into informal learning has trodden a difficult line between the formal constraints of academic discourse, curricula and publishers on the one hand, and active pedagogical engagement on the other. This tension presents epistemological and methodological challenges which have formed a major part of my research.

My practical engagement ranges from attempts to increase flexibility, self-organisation and personalisation in formal assessment in Bolton’s Computing department, through innovative engagements with students that exploit their informal learning to enhance employability, to a Masters framework we established at Bolton (IDIBL: Inter-Disciplinary Inquiry Based Learning). Based on the Ultralab Ultraversity model, IDIBL provided an opportunity to explore the constraints existing between ideals and reality in bridging the gap between formal and informal education. In addition, I have delivered (semi-formal!) seminars on informal learning and technology to teachers, learners, businesses and artists in the UK and Europe as part of projects funded by the EU and JISC. Throughout this work, my methodological approach has been to use cybernetic modelling techniques to generate ideas, explanations and interventions, and then to explore the constraints which are exposed when some ideas work and others don’t.

Informal Learning Research to Date: Personal Learning Environments

The theoretical foundation for the study of constraint is explicitly a cybernetic concern, distinct from the more conventional focus on identifying causal mechanisms in the light of statistically produced event regularities in education. Cybernetics is a discipline of model-building. Positivist approaches envisage models being used to explain or predict phenomena such as the impact of policy interventions. Yet it is almost certainly impossible to ‘simulate’ the reality of education, although I have usefully experimented with agent-based modelling techniques in the past. Models can instead be viewed simply as ways of generating ideas, theories, explanations and possibilities for intervention, each of which can be explored in reality.

My early research using modelling approaches involved personal technologies to bridge the gap between informal and formal learning. Principal amongst these was the Personal Learning Environment (PLE), a development which grew from the emergence of technological conditions that permitted learner-centred (as opposed to institution-centred) control of technology, with consequent new possibilities for individuals to coordinate informal learning, personal and social communications, and formal learning commitments. These developments attracted attention from government and EU policy makers, who made considerable investments in exploring the potential of the new technology. In total, between 2004 and 2014 Bolton attracted over 10 large-scale projects from the EU and JISC which explored the ramifications of these technologies.

Theoretically and methodologically, these technical transformations presented significant challenges. Technologies constrain practice (and can be a barrier to learning) whilst also providing new opportunities for informal access to resources and communities. The principal question across all this work was, “How can we understand the social dynamics of these constraints?” In the PLE project (funded by JISC), a cybernetic model, the “Viable System Model” (VSM) (Beer, 1971), was used both to critique existing organisational and technological constraints and to generate new possibilities for the organisation of technology and the personal coordination of individual learning. The VSM is a powerful generative idea which articulates the way “viable systems” coordinate the different activities that maintain their viability. Since adaptation is essential to viability, when the model is applied to individuals some of these activities clearly relate to “informal learning”, some to formal learning, and others to more basic requirements for viability.

The PLE project was explicitly focused on generating ideas and exploring new technologies: social software was only just becoming important at the time (2005), and we produced a prototype system to illustrate the concepts and explore their implications. To explore the extent to which the ideas generated by the PLE models could be found in reality, I managed a further project called SPLICE (Social Practices, Learning and Interoperability in Connected Environments), which took these ideas into the outside world. The outcomes from SPLICE helped identify constraints, showing what did and didn’t work, which in turn led to theoretical refinement of the models. The most significant finding was the importance of intersubjectivity in informal engagements with technology. Existing cybernetic models tended to view social dynamics as a kind of symbolic processing (Pask’s conversation theory is particularly notable for this), leaving an explanatory gap concerning the less tangible aspects of inter-personal engagement (identity, friendship, alienation, empathy, fear, love, charisma). Strong evidence for the importance of intersubjectivity included the effectiveness of “champions of informal learning practices”. Fundamentally, both the PLE and SPLICE projects sought to create conditions for the transformation of informal learning. Outcomes from SPLICE helped inform the University of Bolton’s technology strategy, which I co-authored in 2009.

The importance of the cybernetic focus on constraint has been to support an approach which is informed by failures as much as by successes at the interface between theory and the reality of the lifeworld of education. Because cybernetics is recursive and reflexive, however, constraints exist not just between reality and theory, but between theory and method, between method and reality, and between competing discourses about education. Policy itself is a constraint which can lead to the separation of theory and method: for example, where technological developments depart from their theoretical foundations. Informal learning was a major theme of subsequent projects (2009–2014) which built on PLE ideas, notably the EU TRAILER (Tagging, Recognition and Acknowledgment of Informal Learning Experiences) project and the large-scale school-based ITEC project. The experiences of these projects reconfirmed the importance of understanding intersubjectivity in informal learning, but also raised issues concerning the relationship between informal learning and the “institution of education”, social status, and the aims of policy-making.

Scientific Method and the Philosophical Background behind the Exploration of Constraints in Learning

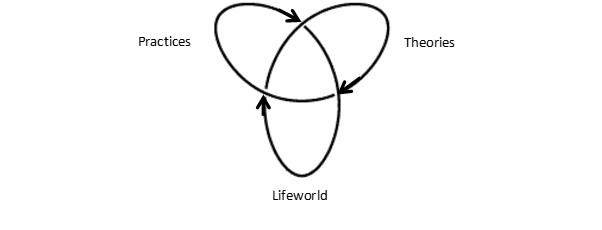

The work around the PLE articulates a research methodology which sits at the heart of epistemological and ontological debates about methods in the social sciences. These debates have historic origins in the philosophy of science, particularly in Hume’s arguments concerning event regularities and the social construction of causes. In my PhD of 2011 (on “Educational Technology and Value in Higher Education”), I adopted the Critical Realist position that Hume was wrong to be sceptical about the reality of causes, and that there are discoverable generative mechanisms in the social world (belonging to what Bhaskar calls the ‘intransitive’ dimension). This was helpful in identifying ‘demi-regularities’ (Lawson) among the phenomena produced by the PLE and in generating possible explanations for their production. However, the approach failed to go beyond a “theoretical melange” towards more concrete and defensible knowledge which could reliably inform policy. More recently, my work has become critical of the implicit foundationalism in Critical Realism, and I have turned once again to Hume to consider in what ways his radical scepticism about nature might be justified, supported by arguments from ‘Speculative realism’, feminist science studies and socio-materiality. At the same time I have looked at the scientific approaches of early cybernetics, notably that of Ross Ashby, whose understanding of learning as self-adaptation was inherent in his attempts to create an artificial brain in the 1950s. Ashby’s method, as he articulated it, was to let research be informed by identifying the constraints in nature which prevent some theoretically imagined possibilities from being realised. In essence, Ashby’s method is to use cybernetic ideas to generate theories, explanations and new practices which are then tested in the environment we share (the Husserlian Lifeworld). Between theories, practices and experience lie the constraints which the scientific process reveals.
I have found this mutual constraint most effectively illustrated by the trefoil knot (itself a feature of cybernetic theory), shown in Figure 1 above.

Complex social phenomena like ‘informal learning’ present constraints not just between observed phenomena and available explanations. Constraints also occur in the discourse about explanations (e.g. between Kuhnian paradigms, or Burrell and Morgan’s ‘sociological paradigms’), in the organisation of institutions, academic discourses, journals and so on, and at the boundary between underlying generative theory, empirical practices and technological developments. Constraints produce “surprises”, irregularities between expectations and experience which demand explanation. In the study of complex social phenomena like ‘informal learning’, different sociological paradigms (e.g. functionalism, phenomenology, critical theory) produce different kinds of approaches and different kinds of results which are often incompatible (i.e. they produce “surprises” at the constraint boundaries). “Surprise”, whether it results from theoretical, methodological or practical inconsistency, drives the creation of new interventions and methods to expose the constraints that produce it. Indeed, Hume’s regularity theory can usefully be seen through the lens of “surprise” and “confirmation”. Furthermore, the phenomenology of learning processes themselves can also be characterised by “surprise” and “confirmation”, insofar as they involve perturbation and adaptation.

The cybernetic analogue of “surprise” and “confirmation” lies in the way information is understood, and in recent years this has been my focus both in developing new methods and in generating new ideas and interventions. Shannon’s information theory provides a calculus for the ‘surprisingness’ of communications. I have worked with Loet Leydesdorff at the University of Amsterdam on understanding the relationship between the analysis of surprisingness in communication and the development of meaning (the focus of Leydesdorff’s work on evolutionary economics, which has been highly influential in economic and social policy-making across the world).
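
As a minimal illustration of the calculus Shannon provides (a generic sketch, not a method taken from the projects described here), the surprisal of an event with probability p is log2(1/p) bits, and the entropy H of a source is its average surprisal. The coding scheme below, with one ‘insight’ among seven ‘routine’ observations, is hypothetical and chosen only to make the numbers easy to follow.

```python
import math
from collections import Counter


def surprisal(p: float) -> float:
    """Self-information ('surprisingness') of an event with probability p, in bits."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return math.log2(1 / p)


def entropy(sequence) -> float:
    """Shannon entropy H = sum(p * log2(1/p)) over observed symbol frequencies, in bits."""
    counts = Counter(sequence)
    n = len(sequence)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


# Hypothetical coding of a learner's day: mostly routine activity, one moment of insight.
observations = ["routine"] * 7 + ["insight"]
freq = Counter(observations)
n = len(observations)

for symbol, count in freq.items():
    print(f"{symbol}: {surprisal(count / n):.3f} bits")
print(f"average surprisal (entropy): {entropy(observations):.3f} bits")
```

Under these assumptions the rare ‘insight’ carries 3 bits, each routine observation about 0.19 bits, and the sequence as a whole averages about 0.54 bits per observation: surprise is concentrated in rare events set against a largely predictable background.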

Focus on information theory builds on the cybernetic models behind the PLE, but deepens them with more refined methods and a richer array of empirical possibilities. Most critically, surprises occur against a background, and studying this background has proved most fruitful. The background is variously called ‘constraint’ or ‘absence’; in information theory, it is called ‘redundancy’. The dynamics of redundancy appear promising for analysing the kinds of highly heterogeneous data that can emerge in informal learning.
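
Shannon’s relative redundancy offers one way to make this ‘background’ measurable: R = 1 − H/H_max, where H_max = log2 N for N possible categories. The sketch below assumes a hypothetical four-category coding of a day’s activity; R approaches 1 as activity becomes more constrained (predictable) and 0 as every category becomes equally likely.

```python
import math
from collections import Counter


def entropy(sequence) -> float:
    """Shannon entropy of the observed symbol frequencies, in bits."""
    n = len(sequence)
    return sum((c / n) * math.log2(n / c) for c in Counter(sequence).values())


def redundancy(sequence, alphabet_size: int) -> float:
    """Shannon's relative redundancy R = 1 - H / H_max, with H_max = log2(alphabet_size).

    R = 0 when every possible category is equally likely (no constraint);
    R = 1 when only one category ever occurs (complete constraint).
    """
    if alphabet_size < 2:
        raise ValueError("need at least two possible categories")
    if len(set(sequence)) > alphabet_size:
        raise ValueError("sequence uses more categories than the coding scheme allows")
    return 1 - entropy(sequence) / math.log2(alphabet_size)


# Hypothetical four-category coding scheme for one day of activity.
categories = ["labour", "rest", "conversation", "reading"]
day = ["labour"] * 10 + ["rest"] * 4 + ["conversation"] + ["reading"]
print(f"H = {entropy(day):.3f} bits, R = {redundancy(day, len(categories)):.3f}")
```

Because H_max depends on the chosen coding scheme, redundancy values in this sketch are only comparable across data coded with the same set of categories.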

The Research Plan: The Enrichment of Theoretical Models with Information Theory

Dealing with the complexity and heterogeneity of data in informal learning forms the cornerstone of my research plan. In Suchman’s socio-material investigations of human-machine interaction, or in the educational interventions of Sugata Mitra, we witness occasional ‘surprising’ moments against a background of tentative, habitual practices in which little happens. Even the coal miner with a copy of Marx’s “Capital” (a classic example of informal learning) would have had a few moments of insight against a background of exhausting labour, managing social commitments, conversation and rest. Whilst the sociomaterial view championed by Suchman, Orlikowski or Barad presents ‘entanglement’ as the principal focus for investigation, from a cybernetic perspective entanglements can be seen as interactions of constraint. Surprising moments appear as an ecological synergy between different processes which individually might not appear to do very much; it is rather like the flow of rhythm, counterpoint, harmony, melody and accompaniment in music. The question is whether these surprising moments of discovery and development can be investigated within coherent theoretical contexts. The approach taken here is to treat the relationship between different sources of data as synergistic, rather than attempting to ‘triangulate’ data.

The synergistic approach to data in informal learning research focuses on the redundant ‘background’ to surprising moments. Support for this comes from a number of developments in systems theory, ecology and biology, including:

Recent work in ecology where the dynamics of ecosystems have been studied for the balance they provide between system rigidity and flexibility expressed in terms of information metrics (Ulanowicz, 2009)

The synergetics of Hermann Haken (2015), which has focused on the dynamics between redundant information in processes of social growth and development

The Triple Helix of Leydesdorff (2006) which has examined the conditions for innovation by exploring mutual redundancies in discourse dynamics

Emerging systems-biological theories of growth, particularly those of Deacon (2012), but also Kauffman (2000), Brier (2008) and Hoffmeyer (2008), which focus on constraint and absence as a driving force for biological development and epigenesis.
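
The ecological balance between rigidity and flexibility can be sketched with the information indices associated with Ulanowicz. For a matrix of flows T_ij from compartment i to compartment j, with total throughput T.., development capacity is C = Σ T_ij log2(T../T_ij), ascendency (organised constraint) is A = Σ T_ij log2(T_ij T.. / (T_i. T_.j)), and overhead (reserve flexibility) is C − A. The base-2 logarithm and the two toy networks below are choices made for illustration, not data from any study.

```python
import math


def flow_metrics(flows):
    """Ulanowicz-style information indices for a square matrix of non-negative flows.

    flows[i][j] is the flow from compartment i to compartment j.
    Returns (capacity, ascendency, overhead) in bits x flow units, with
    overhead = capacity - ascendency.
    """
    total = sum(sum(row) for row in flows)        # total system throughput T..
    out_sums = [sum(row) for row in flows]        # T_i.: total flow leaving i
    in_sums = [sum(col) for col in zip(*flows)]   # T_.j: total flow entering j
    capacity = ascendency = 0.0
    for i, row in enumerate(flows):
        for j, t in enumerate(row):
            if t > 0:  # zero flows contribute nothing (t * log t -> 0)
                capacity += t * math.log2(total / t)
                ascendency += t * math.log2(t * total / (out_sums[i] * in_sums[j]))
    return capacity, ascendency, capacity - ascendency


# Hypothetical three-compartment toy networks.
rigid = [[0, 10, 0],
         [0, 0, 10],
         [10, 0, 0]]     # one fixed cycle: each compartment has a single outlet
flexible = [[2, 2, 2],
            [2, 2, 2],
            [2, 2, 2]]   # every compartment sends equally to every compartment

for name, network in [("rigid", rigid), ("flexible", flexible)]:
    c, a, o = flow_metrics(network)
    print(f"{name}: capacity={c:.2f}, ascendency={a:.2f}, overhead={o:.2f}")
```

In the rigid cycle all capacity appears as ascendency and overhead is zero; in the fully mixed network ascendency is zero and all capacity is overhead. Real ecosystems, on Ulanowicz’s account, sit between these extremes.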

Of these influences, the one I am closest to is that of Leydesdorff, with whom I have co-authored a few papers. The synergistic approach to data analysis is best explained by comparing ‘additive’ and ‘subtractive’ synthesis, as illustrated in Figure 2. The right-hand image represents triangulation as the subtraction of data which is not shared between the three dimensions. By contrast, additive synthesis combines the three data sources synergistically. Redundancies may be aggregated in this synergistic way since overlap is a principle of redundancy, and this avoids the problems of the “mereological fallacy” (the confusion of parts for wholes) because the analytical focus is on constraint, not on specific causes or features. Consequently, the technique is suited to examining the large number of dimensions of data which can be associated with informal learning.

Figure 2: Synergistic 'additive' data synthesis (LEFT) vs subtractive 'triangulating' synthesis (RIGHT) (diagram adapted from Leydesdorff)
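
One way to make the additive picture in Figure 2 concrete is the three-dimensional mutual information that Leydesdorff uses in Triple Helix studies, T123 = H1 + H2 + H3 − H12 − H13 − H23 + H123, where negative values are read as synergy (a reduction of uncertainty at the level of the whole). The sketch below is a generic implementation under that formula, not the analysis pipeline used in the work described here; the three binary-coded data sources are hypothetical.

```python
import math
from collections import Counter


def joint_entropy(*columns) -> float:
    """Shannon entropy (bits) of the joint distribution of one or more aligned columns."""
    rows = list(zip(*columns))
    n = len(rows)
    return sum((c / n) * math.log2(n / c) for c in Counter(rows).values())


def t123(x, y, z) -> float:
    """Three-dimensional mutual information T123 = Hx + Hy + Hz - Hxy - Hxz - Hyz + Hxyz."""
    h = joint_entropy
    return (h(x) + h(y) + h(z)
            - h(x, y) - h(x, z) - h(y, z)
            + h(x, y, z))


# Hypothetical binary codes from three data sources, aligned so that
# position k in each list describes the same learning episode.
interviews = [0, 0, 1, 1]
logs = [0, 1, 0, 1]
artefacts = [0, 1, 1, 0]  # XOR of the other two: informative only in combination
print(f"synergistic case: T123 = {t123(interviews, logs, artefacts):+.2f} bits")

duplicate = [0, 0, 1, 1]
print(f"duplicated case:  T123 = {t123(duplicate, duplicate, duplicate):+.2f} bits")
```

In the XOR-like case no single source says anything about another, yet any two together determine the third, and T123 comes out at −1 bit. When the three sources merely duplicate one another, T123 is +1 bit. A triangulating reading that keeps only what is shared pairwise would find nothing in the first case.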

This approach extends cybernetic models by bringing their underlying theoretical principles together with a deeper analysis of constraints. Models which treat communication as the exchange of codified messages, such as Pask’s conversation theory of teachers and learners (later developed by Laurillard), Parsons’s ‘double contingency’ of communication and Luhmann’s social systems theory, had problems in bridging the gap between the model and ‘real people’, and were not adapted in the light of reality. With a synergistic approach, issues such as empathy, tacit knowledge, and less tangible intersubjective aspects of human communication and learning become available for analysis. Bringing Alfred Schutz’s concept of ‘tuning in’ to one another’s inner world into an analytical frame also presents new perspectives on Vygotskian ZPD dynamics and Freirean critical pedagogy.

Potential of Research in the Identification of Constraints in Informal Learning and the Generation of New Possibilities, Projects and Papers

The study of information in education described above is finer-grained than previous cybernetic models which have been used in education (notably Pask/Laurillard conversation theory, and the viable system model of Beer). Having said this, it is also commensurable with those previous models. At the very least, this theoretical re-orientation generates new areas of investigation:

“Intersubjectivity in informal learning”: Schutz’s concept of intersubjectivity (which he developed from Husserl and which influenced a number of theorists, including Goffman and Bruner) places emphasis on what he calls “mutual tuning-in” to one another, a process which occurs over time. Social relationships which convey little information but contain much mutual redundancy between the parties are good examples of what interested Schutz (he made particularly acute observations about the way music communicates). Informal communications using social media (e.g. Twitter, Facebook, Dubsmash) also often convey little information but have much redundancy. They would seem to offer ideal opportunities for investigating the link between mutual redundancy and intersubjective understanding. Vygotskian ZPD dynamics and critical pedagogy are also closely related to issues of intersubjectivity.
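
A first step towards operationalising this link might treat two parties’ coded turns as aligned sequences and compute their mutual information, T12 = H(A) + H(B) − H(A, B), as a simple proxy for their mutual redundancy. Using mutual information as that proxy, and the message codes below, are assumptions made for illustration.

```python
import math
from collections import Counter


def entropy(items) -> float:
    """Shannon entropy of the observed item frequencies, in bits."""
    n = len(items)
    return sum((c / n) * math.log2(n / c) for c in Counter(items).values())


def mutual_information(a, b) -> float:
    """T12 = H(a) + H(b) - H(a, b): uncertainty about one party's turns removed by knowing the other's."""
    if len(a) != len(b):
        raise ValueError("turn sequences must be aligned")
    return entropy(a) + entropy(b) - entropy(list(zip(a, b)))


# Hypothetical coded exchanges: position k pairs a message with its reply.
friend_a = ["greet", "joke"] * 4
friend_b = ["greet", "laugh"] * 4               # replies track A's turns exactly
stranger = ["greet", "greet", "laugh", "laugh"] * 2  # replies unrelated to A's turns

for name, replies in [("friend", friend_b), ("stranger", stranger)]:
    print(f"{name}: H(A)={entropy(friend_a):.2f}, H(B)={entropy(replies):.2f}, "
          f"T12={mutual_information(friend_a, replies):.2f} bits")
```

Each party’s turns carry only 1 bit per turn in both cases, but between the friends all of that uncertainty is shared (T12 = 1 bit), whereas with the stranger none is (T12 = 0).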

“Intersubjectivity in formal learning”: Analysis of the constraints around informal learning and social relations can provide new ways of thinking about the relations between teachers and students in formal education, the effective design of learning activities, new forms of assessment and so on.

“Informal learning in new settings”: Technologies provide new ways of creating material constraints within which informal learning behaviour can be studied. For example, the mainstreaming of virtual reality environments will provide opportunities to create rich data streams about learner curiosity, emotions, likes and dislikes. It would be particularly interesting to combine such approaches with research on intersubjectivity. Other material contexts could exploit interactive public artworks using sensors to record data about engagement patterns. The site of such experiments can also be important: use in libraries, museums, galleries, pubs and so on can provide powerful new ways of understanding how human curiosity and adaptation works in different socio-material environments.

“Informal learning, Inquiry and accreditation”: In Inquiry-based learning contexts, the constraints of the curriculum are reduced but assessment is conducted through techniques like Winter’s “Patchwork text”. Researching the ways in which learners might analyse and externalise their own learning dynamics in everyday life can present new assessment strategies which focus on the ways in which intellectual growth and development can be measured and accredited through changes in patterns of communication in everyday life between students, teachers, the workplace, technological practices and so on.

“Analysing Narratives of Informal Learning”: Narrative approaches to educational research, particularly Peter Clough’s “narrative fictions”, are consistent with an approach to analysing constraint. Narratives feel ‘real’ because they reproduce constraints we know from reality. In research on informal learning, reality can get lost behind rhetoric, and analysing the constraints inherent in realistic narrative accounts can be a fruitful way of exposing the dynamics of constraint in informal learning as it actually occurs.

“Status and Informal Learning”: The social dynamics of status have become a major issue of inquiry, particularly in research into Higher Education (see Roger Brown). I have recently used John Searle’s social ontology and his concept of “status function” as a way of analysing the PLE, and the dynamics of educational projects within educational institutions more generally. Searle’s linguistic understanding of status is powerful, but I believe it currently misses the relationship between status and scarcity (the two are related, which he acknowledged to me at a talk he gave in Cambridge in May). Education’s production of formal certification is a manufacture of scarcity closely related to current processes of marketization. Informal education is technically ‘abundant’ rather than scarce, and (it seems) does not have the same status. This is a problem concerning the separation between education and society, with implications for social equality, and it deserves much deeper investigation. I believe a deeper understanding of intersubjectivity can enrich Searle’s model whilst providing pointers towards more equitable policy.